|

Aircraft Engine

History

Piston Engine Development

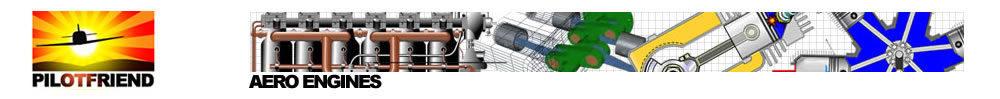

Picture a tube or cylinder that holds a snugly fitting plug.

The plug is free to move back and forth within this tube,

pushed by pressure from hot gases. A rod is mounted to the

moving plug; it connects to a crankshaft, causing this shaft

to rotate rapidly. A propeller sits at the end of this shaft,

spinning within the air. Here, in outline, is the piston

engine, which powered all airplanes until the advent of jet

engines.
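
The plug-rod-crankshaft arrangement described above can be sketched numerically. The short Python example below is only an illustration of the geometry, not a model of any particular engine: the function name `piston_position` and the crank and rod dimensions are hypothetical values chosen for the example.

```python
import math


def piston_position(crank_angle_rad, crank_radius, rod_length):
    """Distance from the crankshaft axis to the piston pin.

    Models an idealized slider-crank: the crank pin travels in a circle
    of radius `crank_radius`, and a rigid rod of length `rod_length`
    links it to a piston that can only slide along the cylinder axis.
    """
    r, l = crank_radius, rod_length
    return r * math.cos(crank_angle_rad) + math.sqrt(
        l ** 2 - (r * math.sin(crank_angle_rad)) ** 2
    )


# Hypothetical dimensions, in meters (assumed for illustration only).
CRANK_RADIUS_M = 0.05
ROD_LENGTH_M = 0.20

top = piston_position(0.0, CRANK_RADIUS_M, ROD_LENGTH_M)         # piston farthest out
bottom = piston_position(math.pi, CRANK_RADIUS_M, ROD_LENGTH_M)  # piston closest in
stroke = top - bottom

if __name__ == "__main__":
    # Print the piston position for one full turn of the crankshaft.
    for degrees in range(0, 361, 45):
        x = piston_position(math.radians(degrees), CRANK_RADIUS_M, ROD_LENGTH_M)
        print(f"{degrees:3d} deg -> {x:.4f} m")
    print(f"stroke = {stroke:.4f} m")
```

In this idealized geometry the piston's total travel, the stroke, is always twice the crank radius, whatever the rod length.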

Pistons in cylinders first saw use in steam engines.

Scotland's James Watt crafted the first good ones during the

1770s. A century later, the German inventors Nicolaus Otto and

Gottlieb Daimler introduced gasoline as the fuel, burned

directly within the cylinders. Such motors powered the

earliest automobiles. They were lighter and more mobile than

steam engines, more reliable, and easier to start.

Some single-piston gasoline engines entered service, but for

use with airplanes, most such engines had a number of pistons,

each shuttling back and forth within its own cylinder. Each

piston also had a connecting rod, which pushed on a crank that

was part of a crankshaft. This crankshaft drove the propeller.

Cutaway view of a piston engine built by Germany's Gottlieb

Daimler. Though dating to the 19th century, the main features

of this motor appear in modern engines.

Engines built for airplanes had to produce plenty of power

while remaining light in weight. The first American

planebuilders—Wilbur and Orville Wright, Glenn Curtiss—used

motors that resembled those of automobiles. They were heavy

and complex because they used water-filled plumbing to stay

cool.

A French engine of 1908, the "Gnome," introduced air cooling as

a way to eliminate the plumbing and lighten the weight. It was

known as a rotary engine. The Wright and Curtiss motors had

been mounted firmly in supports, with the shaft and propeller

spinning. Rotary engines reversed that, with the shaft being

held tightly—and the engine spinning! The propeller was

mounted to the rotating engine, which stayed cool by having

its cylinders whirl within the open air.

Numerous types of Gnome engines were designed and built, one

of the most famous being the 165-hp 9-N "Monosoupape" (one

valve). It was used during WWI primarily in the Nieuport 28.

The engine had one valve per cylinder. Because it had no intake

valves, the fuel mixture entered the cylinders through

circular holes or "ports" cut in the cylinder walls. The

propeller was bolted firmly to the engine and it, along with

the cylinders, turned as a single unit around a stationary

crankshaft rigidly mounted to the fuselage of the airplane.

The rotary engine used castor oil for lubrication.

During World War I, rotaries attained tremendous popularity.

They were less complex and easier to make than the

water-cooled type. They powered such outstanding fighter

planes as Germany's Fokker DR-1 and Britain's Sopwith Camel.

They used castor oil for lubrication because it did not

dissolve in gasoline. However, they tended to spray this oil

all over, making a smelly mess. Worse, they were limited in

power. The best of them reached 260 to 280 horsepower (190 to

210 kilowatts).

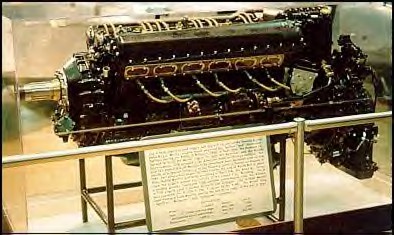

America's greatest technological contribution during WWI was

the Liberty 12-cylinder water-cooled engine. Rated at 410 hp,

it weighed only two pounds per horsepower, far surpassing

similar types of engines mass-produced by England, France,

Italy, and Germany at that time.

Thus, in 1917 a group of American engine builders returned to

water cooling as they sought a 400-horsepower (300-kilowatt)

engine. The engine that resulted, the Liberty, was the most

powerful aircraft engine of its day, with the U.S. auto

industry building more than 20,000 of them. Water-cooled

engines built in Europe also outperformed the air-cooled

rotaries, and lasted longer. With the war continuing until

late in 1918, the rotaries lost favor.
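
The power figures quoted in this article pair horsepower with kilowatts, and the Liberty is described as weighing about two pounds per horsepower. The Python sketch below shows the arithmetic behind such figures. It assumes the mechanical horsepower (about 0.7457 kilowatts); the helper names are hypothetical, and the weight it prints is simply the product of the two quoted numbers, not a measured value.

```python
# Assumed conversion factor: one mechanical horsepower in kilowatts.
HP_TO_KW = 0.7457


def hp_to_kw(horsepower):
    """Convert mechanical horsepower to kilowatts."""
    return horsepower * HP_TO_KW


def engine_weight_lb(rated_hp, pounds_per_hp):
    """Estimate engine weight from rated power and a weight-per-power ratio."""
    return rated_hp * pounds_per_hp


if __name__ == "__main__":
    # Power ratings quoted in the article, in horsepower.
    for hp in (220, 400, 410):
        print(f"{hp} hp is about {hp_to_kw(hp):.0f} kW")

    # Liberty: 410 hp at roughly two pounds per horsepower (figures from the text).
    print(f"Liberty weight estimate: {engine_weight_lb(410, 2.0):.0f} lb")
```

The article's kilowatt values are rounded, which is why 400 horsepower appears as 300 kilowatts rather than the slightly lower value the conversion gives.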

In this fashion, designers returned to water-cooled motors

that again were fixed in position. They stayed cool by having

water or antifreeze flow in channels through the engine to

carry away the heat. A radiator cooled the heated water. In

addition to offering plenty of power, such motors could be

completely enclosed within a streamlined housing, to reduce

drag and thus produce higher speeds in flight. Rolls-Royce,

Great Britain's leading engine-builder, built only

water-cooled motors.

Air-cooled rotaries were largely out of the picture after

1920. Even so, air-cooled engines offered tempting advantages.

They dispensed with radiators that leaked, hoses that burst,

cooling jackets that corroded, and water pumps that failed.

Thus, the air-cooled "radial engine" emerged. This type of

air-cooled engine arranged its cylinders to extend radially

outward from its hub, like spokes of a wheel. The U.S. Navy

became an early supporter of radials, which offered

reliability along with light weight. This was an important

feature if planes were to take off successfully from an

aircraft carrier's flight deck.

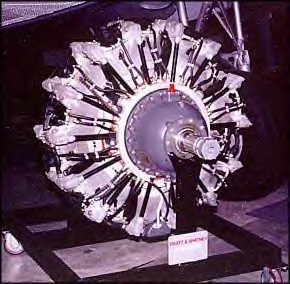

With financial support from the Navy, two American firms,

Wright Aeronautical and Pratt & Whitney, began building

air-cooled radials. The Wright Whirlwind, in 1924, delivered

220 horsepower (164 kilowatts). A year later, the Pratt &

Whitney Wasp was tested at 410 horsepower (306 kilowatts).

Aircraft designers wanted to build planes that could fly at

high altitudes. High-flying planes could swoop down on their

enemies and also were harder to shoot down. Bombers and

passenger aircraft flying at high altitudes could fly faster

because air is thin at high altitudes and there is less drag

in the thinner air. These planes also could fly farther on a

tank of fuel.

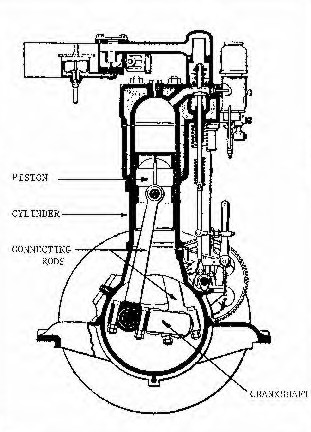

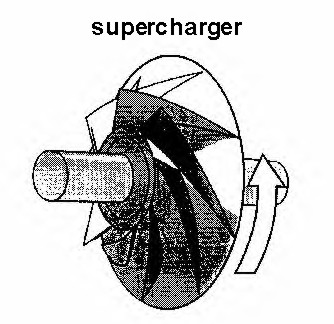

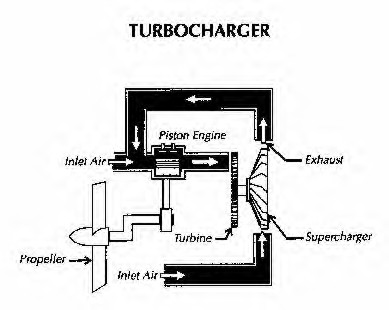

The supercharger, spinning within a closely fitted housing (not shown), pumped

additional air into aircraft piston engines.

But because the air was thinner, aircraft engines produced

much less power. They needed air to burn their fuel, and they could

not hold full power unless they received more of it. Designers responded by

fitting the engine with a "supercharger." This was a pump that

took in air and compressed it. The extra air, fed into an

engine, enabled it to continue to put out full power even at

high altitude.
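
How much thinner the air becomes, and how hard a supercharger must work to make up for it, can be estimated with the International Standard Atmosphere. The Python sketch below is an idealized illustration only: it assumes the standard-atmosphere model for altitudes up to 11,000 meters, ignores heating of the air during compression, and uses hypothetical function names.

```python
# International Standard Atmosphere constants (troposphere, 0 to 11,000 m).
SEA_LEVEL_TEMP_K = 288.15      # standard sea-level temperature
LAPSE_RATE_K_PER_M = 0.0065    # temperature drop per meter of altitude
PRESSURE_EXPONENT = 5.25588    # g*M / (R*L) for the standard atmosphere
TROPOPAUSE_M = 11_000.0


def isa_ratios(altitude_m):
    """Return (temperature, pressure, density) as fractions of sea-level values."""
    if not 0.0 <= altitude_m <= TROPOPAUSE_M:
        raise ValueError("this sketch only covers 0 to 11,000 m")
    temperature_ratio = 1.0 - LAPSE_RATE_K_PER_M * altitude_m / SEA_LEVEL_TEMP_K
    pressure_ratio = temperature_ratio ** PRESSURE_EXPONENT
    density_ratio = pressure_ratio / temperature_ratio  # ideal-gas law
    return temperature_ratio, pressure_ratio, density_ratio


def boost_ratio_needed(altitude_m):
    """Compression ratio that would restore sea-level pressure (idealized)."""
    _, pressure_ratio, _ = isa_ratios(altitude_m)
    return 1.0 / pressure_ratio


if __name__ == "__main__":
    altitude_m = 30_000 * 0.3048  # 30,000 feet expressed in meters
    _, pressure, density = isa_ratios(altitude_m)
    print(f"pressure: {pressure:.1%} of sea level")
    print(f"density:  {density:.1%} of sea level")
    print(f"boost ratio needed: {boost_ratio_needed(altitude_m):.2f}")
```

Under these assumptions the air at 30,000 feet has well under half its sea-level density, so an unsupercharged engine would take in far less air on each stroke.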

A supercharger needed power to operate. This power came from

the engine itself. The supercharger, also called a centrifugal

compressor, drew air through an inlet. It compressed this air

and sent it into the engine. Similar compressors later found

use in early jet engines.

Early superchargers underwent tests before the end of World

War I, but they were heavy and offered little advantage. The

development of superchargers proved to be technically

demanding, but by 1930, the best British and American engines

installed such units routinely. In the United States,

the Army funded work on superchargers at another

engine-builder, General Electric. After 1935, engines fitted

with GE's superchargers gave full power at heights above

30,000 feet (9,000 meters).

Fuels for aviation also demanded attention. When engine

designers tried to build motors with greater power, they ran

into the problem of "knock." This had to do with the way fuel

burned within them. An airplane engine had a carburetor that

took in fuel and air, producing a highly flammable mixture of

gasoline vapor with air, which went into the cylinders.

There, this mix was supposed to burn very rapidly, but in a

controlled manner. Unfortunately, the mixture tended to

explode, which damaged engines. The motor then was said to

knock.

Poor-grade fuels avoided knock but produced little power. Soon

after World War I, an American chemist, Thomas Midgley,

determined that small quantities of a suitable chemical added

to high-grade gasoline might help it burn without knock. He

tried a number of additives and found that the best was

tetraethyl lead. The U.S. Army began experiments with leaded

aviation fuel as early as 1922; the Navy adopted it for its

carrier-based aircraft in 1926. Leaded gasoline became

standard as a high-test fuel, used widely in automobiles as

well as in aircraft.

The Pratt & Whitney R-1830 Twin Wasp engine was one of the most efficient

and reliable engines of the 1930s.

It was a "twin-row" engine. Twin-row engines powered the

warplanes of World War II.

Leaded gas improved an aircraft engine's performance by

enabling it to use a supercharger more effectively while using

less fuel. The results were spectacular. The best engine of

World War I, the Liberty, developed 400 horsepower (300

kilowatts). In World War II, Britain's Merlin engine was about

the same size—and put out 2,200 horsepower (1,640 kilowatts).

Samuel Heron, a long-time leader in the development of

aircraft engines and fuels, writes that "it is probably true

that about half the gain in power was due to fuel."

The V-1650 liquid-cooled engine was the U.S. version of the

famous British Rolls-Royce "Merlin" engine, which powered the

"Spitfire" and "Hurricane" fighters during

the Battle of Britain in 1940.

During World War II, the best piston engines used a

turbocharger. This was a supercharger that drew its power from

the engine's hot exhaust gases. This exhaust had plenty of

power, which otherwise would have gone to waste. A turbine

tapped this power and drove the supercharger. Similar turbines

later appeared in jet engines.

These advances in supercharging and knock-resistant fuels laid

the groundwork for the engines of World War II. In 1939, the

German test pilot Fritz Wendel flew a piston-powered fighter

to a speed record of 469 miles per hour (755 kilometers per

hour). U.S. bombers used superchargers to carry heavy bomb

loads at 34,000 feet (10,000 meters). They also achieved long

range; the B-29 bomber could fly non-stop from

Miami to Seattle. Fighters routinely topped 400 miles per hour

(640 kilometers per hour). Airliners, led by the Lockheed

Constellation, showed that they could fly non-stop from coast

to coast.

The Wasp Major engine was developed during World War II, though

it saw service only late in the war

on some B-29 and B-50 aircraft, and after the war. It

represented the most technically advanced and complex

reciprocating engine produced in large numbers in the United

States. It was a four-row engine, meaning it had four

circumferential rows of cylinders.

By 1945, the jet engine was drawing both attention and

excitement. Jet fighters came quickly to the forefront.

However, while early jet engines gave dramatic increases in

speed, they showed poor fuel economy. It took time before

engine builders learned to build jets that could sip fuel

rather than gulp it. Until that happened, the piston engine

retained its advantage for use in bombers and airliners, which

needed to be able to fly a great distance without refueling.

Pratt & Whitney was the first to achieve high thrust with good

fuel economy. Its J-57 engine, which combined the two, first

ran on a test stand in 1950. Eight such engines powered the

B-52, a jet bomber with intercontinental range that entered

service in 1954. Civilian versions of this engine powered the

Boeing 707 and Douglas DC-8, jet airliners that began carrying

passengers in 1958 and 1959, respectively. In this fashion,

jet engines conquered nearly the whole of aviation.

|